Introduction to Web Content Categorization

We operate in two primary business domains. The first is network security, which includes web and DNS filtering, parental controls, and malicious or phishing threat detection. The second is Ad Tech. At the core of both business segments is the zveloDB URL database, which houses the results of zvelo’s advanced AI-based website categorization platform. zveloDB is the market’s premium URL database and web content categorization service for domains, subdomains, and IPs. It powers applications in web and DNS filtering, endpoint security, cyber threat intelligence, brand safety, contextual targeting, subscriber analytics, and more.

What is a URL Database?

A URL database is a structured collection of URLs (Uniform Resource Locators), or web addresses, designed to store and organize them for purposes such as indexing websites, website analytics, or powering web filtering, endpoint security, and ad tech applications.
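As a rough illustration of the lookup side of a URL database, here is a minimal Python sketch that assumes a tiny in-memory dictionary keyed by hostname. The `URL_DB` contents and the `lookup` function are hypothetical and are not zveloDB's API. The sketch only shows a common pattern: match the most specific entry first (subdomain, then parent domain) and match IP addresses exactly.

```python
import ipaddress
from urllib.parse import urlsplit

# Hypothetical stand-in for a URL database: hostname (domain, subdomain,
# or IP address) -> list of category names. Not zveloDB's real schema.
URL_DB = {
    "example.com": ["Business"],
    "news.example.com": ["News"],
    "203.0.113.7": ["Malicious"],
}


def is_ip(host: str) -> bool:
    """True if the host is a literal IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


def lookup(url: str) -> list[str]:
    """Return the categories of the most specific matching host entry."""
    # urlsplit only fills in .hostname when a scheme is present.
    if "://" not in url:
        url = "http://" + url
    host = (urlsplit(url).hostname or "").lower()
    if is_ip(host):
        return URL_DB.get(host, [])  # IP addresses are matched exactly
    # Walk from the full host toward the base domain:
    # blog.news.example.com -> news.example.com -> example.com.
    # Stop before the bare top-level domain ("com").
    labels = host.split(".")
    for i in range(len(labels) - 1):
        candidate = ".".join(labels[i:])
        if candidate in URL_DB:
            return URL_DB[candidate]
    return []
```

For example, `lookup("https://blog.news.example.com/a?b=1")` falls back to the `news.example.com` entry and returns `["News"]`, while `lookup("shop.example.com")` falls back to `example.com`. A real URL database would likely need much more (full-path entries, stricter normalization, scalable storage), none of which is modeled here.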

What is Website Categorization?

Website categorization is the task of assigning a domain, URL, or webpage to a predefined category. These categories can range from Tennis to Medical to Adult to Malicious. The more extensive the category options, the greater the opportunity for better control, targeting, or filtering based on a specified taxonomy. Some applications require only a very basic taxonomy for broadly categorizing base domains. Others, particularly security applications, parental controls, and brand suitability, are better served by a more granular taxonomy with extensive topic categories.

How is Web Content Categorization Used?

One of the main use cases for web categorization is web filtering. In an enterprise environment, web and DNS filtering can block high-risk or potentially dangerous DNS connections to malicious, phishing, and non-sanctioned content domains. Web categorization also powers parental control applications that let parents filter out objectionable content, and classroom management solutions that keep learners safe, organized, and engaged online. In the Ad Tech industry, web categorization is used to increase the contextual relevance of advertisements and thereby improve their conversions. An advertisement for a basketball product is more likely to sell when it is placed on a basketball-related website, whereas conversions would decrease if the same advertisement were displayed on a website about sailing, cosmetics, or tobacco. The Ad Tech industry also uses the same categorizations for brand safety, keeping advertisements off sites and pages that advertisers would not want to be associated with.
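The filtering and brand-safety use cases above share a simple decision at their core: look up a site's categories and check them against a policy. The sketch below assumes each deployment keeps a hypothetical set of blocked category names; the category lists and the `is_blocked` helper are illustrative only.

```python
# Hypothetical block lists, assumed to be configured per deployment.
ENTERPRISE_BLOCKED = {"Malicious", "Phishing"}
PARENTAL_BLOCKED = ENTERPRISE_BLOCKED | {"Adult", "Tobacco"}
# An advertiser's brand-safety exclusion list works the same way.
BRAND_SAFETY_EXCLUDED = {"Adult", "Tobacco"}


def is_blocked(site_categories: set[str], blocked: set[str]) -> bool:
    """Block the request (or skip the ad placement) if any category is listed."""
    return not site_categories.isdisjoint(blocked)
```

Here `is_blocked({"Adult"}, PARENTAL_BLOCKED)` is True while `is_blocked({"Adult"}, ENTERPRISE_BLOCKED)` is False, which shows how the same categorization data can drive different policies.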

Some Key Challenges to Web Categorization:

- Subjectivity in categories: One person might classify a website as a Blog but another might declare it to be News or Entertainment.

- Granularity of categories and hierarchical categories: Should a website be classified as something specific like “Tennis” or is “Sports” good enough?

- Speed & Scalability: There are nearly 2 billion websites out there, so the solution has to be fast and far-reaching!

- Constant change: The internet is constantly changing, so models need to be updated continually.
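One common way to handle the granularity question is a hierarchical taxonomy, in which specific categories roll up into broader ones so each application can choose its own level of detail. The parent/child pairs below are hypothetical (only Tennis and Sports come from the text above), and `ancestors` and `at_depth` are illustrative helpers.

```python
# Hypothetical taxonomy fragment: child category -> parent (None = top level).
PARENT = {
    "Tennis": "Sports",
    "Basketball": "Sports",
    "Sports": None,
    "Medical": "Health",
    "Health": None,
}


def ancestors(category: str) -> list[str]:
    """Return the category and its parents, most specific first."""
    chain = []
    node = category
    while node is not None:
        chain.append(node)
        node = PARENT.get(node)  # unknown categories are treated as top level
    return chain


def at_depth(category: str, depth: int) -> str:
    """Roll a category up to a coarser level (depth 0 = top of the tree)."""
    path = ancestors(category)[::-1]  # most general first
    return path[min(depth, len(path) - 1)]
```

An application that only needs broad categories can call `at_depth("Tennis", 0)` and get "Sports", while one that needs the granular label can call `at_depth("Tennis", 1)` and get "Tennis".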

AI and Machine Learning are central to web categorization. Humans can efficiently annotate some URLs, but it would never be cost-effective to have humans classify ALL websites. Automation is a must.

The classification problem can easily be cast as a Bayesian inference problem. In brief, Bayesian inference is a statistical method for calculating and updating the probability of a hypothesis as additional information is gained. It takes its name from Bayes’ theorem, which describes the probability of an event based on prior knowledge of conditions related to it. For you history buffs, the theorem is named after Thomas Bayes, who is credited with providing the first equation for updating the probability of events as evidence changes.
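Written with a category c and the features x extracted from a page, matching the p(category | features) notation used later in this article, Bayes’ theorem gives the posterior probability of the category as:

```latex
p(c \mid x) = \frac{p(x \mid c)\, p(c)}{p(x)},
\qquad
p(x) = \sum_{c'} p(x \mid c')\, p(c')
```

Here p(c) is the prior probability of the category before the page is examined, p(x | c) is the likelihood of observing those features on a page from that category, and p(x) normalizes the result so that the probabilities over all categories sum to one.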

So, in practice, let’s say that we are trying to predict the probability that a particular website belongs to the “Medical” category. We visit that website and see a rendered version of the underlying HTML. We can grab all of the HTML along with any links and images associated with the website. What we want is a mapping from all of the “features” found in the website to the probability that the website belongs to each of the 497 categories. This is the programmatic equivalent of what a human does when looking at a webpage and trying to fit it into a category: we want the probability distribution over the categories we have defined, given the features extracted from a website. In other words, we want to find a function f(features) that accurately maps the features to the probability of each category.

Selecting the top category is then as simple as finding the category with the highest probability given the features.

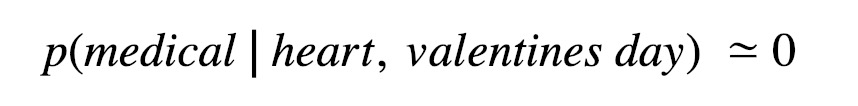
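Assuming the model produces one unnormalized score per category, a common way to turn those scores into a probability distribution is the softmax function (an assumption here, not a confirmed detail of zvelo's models); selecting the top category is then an argmax. The scores in this Python sketch are made up for illustration.

```python
import math


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Convert unnormalized per-category scores into probabilities that sum to one."""
    m = max(scores.values())  # subtracting the max avoids overflow in exp()
    exps = {c: math.exp(s - m) for c, s in scores.items()}
    total = sum(exps.values())
    return {c: e / total for c, e in exps.items()}


def top_category(probs: dict[str, float]) -> str:
    """Select the category with the highest probability (the argmax)."""
    return max(probs, key=probs.get)


# Made-up scores for three of the categories discussed in this article.
probs = softmax({"Medical": 2.0, "Dating & Relationships": 1.0, "Sports": -1.0})
```

With these scores, `top_category(probs)` returns "Medical". Because softmax preserves the ordering of the scores, the argmax of the probabilities is the same as the argmax of the raw scores; the normalization matters when the probabilities themselves are used, for example as confidence values.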

Let’s return to our example of estimating a probability for the “Medical” category. We have fetched and analyzed the page’s entire HTML document (including tags, CSS, text, and images). The English language is complex and full of exceptions and nuances, but let’s say we find the word “heart” within the text of the webpage. This might signal that the page could fit under the “Medical” category because it is discussing “heart attacks” or “heart arrhythmia”. Alternatively, it could be describing Valentine’s Day (i.e., a “Dating & Relationships” category), or the word could be used metaphorically in any number of other contexts. For this reason, our model would assign the “Medical” category only a moderate probability based on the word “heart” alone.

You can see how website categorization depends heavily on the context in which a word is found. For example, what if we also see the words “Valentine’s Day”? Now we can be fairly certain that the site isn’t medical at all. Therefore:

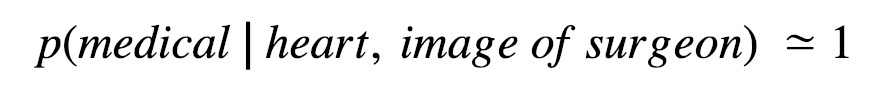

What if we see the word “surgeon” instead? Now the probability is likely to be higher. And if the page also contains a picture of a surgeon wearing a medical mask, we can be almost certain that the webpage belongs under the “Medical” web content category.
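The “heart” example can be made concrete with a toy naive Bayes calculation, a classic Bayesian classifier that treats each word as independent evidence given the category. This is a swapped-in illustration, not a description of zvelo's models: the three categories, the per-word probabilities in `WORD_PROBS`, and the `UNSEEN` fallback are hand-picked assumptions, and the sketch looks only at text, not images.

```python
import math

# Illustrative, hand-picked numbers, not real model parameters:
# p(word | category) for a few words, per category.
WORD_PROBS = {
    "Medical": {"heart": 0.02, "valentine": 0.0001, "surgeon": 0.02},
    "Dating & Relationships": {"heart": 0.02, "valentine": 0.02, "surgeon": 0.0002},
    "Sports": {"heart": 0.005, "valentine": 0.0005, "surgeon": 0.0005},
}
PRIORS = {c: 1 / len(WORD_PROBS) for c in WORD_PROBS}  # uniform prior p(c)
UNSEEN = 1e-4  # assumed fallback for words missing from the table


def posterior(words: list[str]) -> dict[str, float]:
    """Naive Bayes: p(c | words) is proportional to p(c) times the product of p(w | c)."""
    # Work with log-probabilities so long pages do not underflow to zero.
    log_scores = {
        c: math.log(PRIORS[c]) + sum(math.log(probs.get(w, UNSEEN)) for w in words)
        for c, probs in WORD_PROBS.items()
    }
    # Normalize (the p(x) denominator of Bayes' theorem) via log-sum-exp.
    m = max(log_scores.values())
    total = sum(math.exp(s - m) for s in log_scores.values())
    return {c: math.exp(s - m) / total for c, s in log_scores.items()}


for words in (["heart"], ["heart", "valentine"], ["heart", "surgeon"]):
    print(words, round(posterior(words)["Medical"], 3))
```

With these numbers, “heart” alone gives “Medical” a probability of about 0.44; adding “valentine” drops it below 0.01, and adding “surgeon” instead raises it to about 0.98. In practice the word probabilities would be estimated from labeled training data rather than chosen by hand.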

To calculate p(category | features) = f(features), we turn to machine learning.

Supervised vs. Unsupervised Machine Learning

Supervised machine learning involves collecting pre-categorized websites (training labels) along with the corresponding HTML and images for those websites (training features). We then “train” a model to learn a mapping from the large number of features to the labels. Feedback is provided to the model in the form of a loss function, which penalizes the model for incorrect answers and rewards it for correct ones. In this way, the machine learning algorithm gradually improves as more and more labeled data enters the model.
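To make the training loop and loss function concrete, here is a minimal Python sketch of one of the simplest supervised models, logistic regression, trained by gradient descent on the binary cross-entropy loss. The three word-presence features, the six labeled pages, and the learning-rate and epoch settings are all hypothetical, and the sketch answers a single yes/no question (“Medical” or not) rather than choosing among hundreds of categories.

```python
import math

# Hypothetical training data. Features: [has "heart", has "surgeon", has "valentine"].
# Labels: 1 = Medical, 0 = not Medical.
X = [[1, 1, 0], [0, 1, 0], [1, 0, 1], [0, 0, 1], [1, 1, 0], [0, 0, 0]]
y = [1, 1, 0, 0, 1, 0]


def predict_proba(w: list[float], b: float, x: list[int]) -> float:
    """Probability of the positive class under a logistic model."""
    z = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(w: list[float], b: float) -> float:
    """Mean binary cross-entropy: large when the model is confidently wrong."""
    total = 0.0
    for xi, yi in zip(X, y):
        p = predict_proba(w, b, xi)
        total -= yi * math.log(p) + (1 - yi) * math.log(1 - p)
    return total / len(X)


def train(lr: float = 1.0, epochs: int = 1000) -> tuple[list[float], float]:
    """Full-batch gradient descent on the cross-entropy loss."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi  # gradient of the loss w.r.t. z
            grad_w = [g + err * xj for g, xj in zip(grad_w, xi)]
            grad_b += err
        # Step against the mean gradient, which lowers the loss.
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b


w, b = train()
print(log_loss([0.0, 0.0, 0.0], 0.0), log_loss(w, b))  # loss before vs. after training
```

An untrained model (all weights zero) answers 0.5 for every page and has a loss of log 2, about 0.69; after training, the loss on this toy data drops well below 0.1 and every training page lands on the correct side of 0.5.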

Unsupervised learning, on the other hand, uses only the training features, WITHOUT labels, to find useful trends and “clusters” in the data. This method can work well if you have lots and lots of data and need a place to start; however, the resulting models will be much less accurate. And yes, analyzing and labeling the number of websites required to achieve a state-of-the-art model is exhausting work.
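For contrast, here is a minimal unsupervised sketch: plain k-means (Lloyd's algorithm) grouping pages by hypothetical word counts, with no labels involved. The page vectors are made up, and the naive initialization (the first k points) is chosen only to keep the example deterministic. The output is just cluster numbers; deciding that a cluster means “Medical” still takes a human.

```python
# Hypothetical pages as word-count vectors: [count of "surgeon", count of "goal"].
PAGES = [[5, 0], [4, 1], [6, 0], [0, 5], [1, 4], [0, 6]]


def squared_distance(a: list[float], b: list[float]) -> float:
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def assign(points, centroids):
    """Index of the nearest centroid for each point."""
    return [
        min(range(len(centroids)), key=lambda i: squared_distance(p, centroids[i]))
        for p in points
    ]


def kmeans(points, k: int, iters: int = 20):
    """Lloyd's algorithm: alternate assigning points and moving centroids."""
    centroids = [list(map(float, p)) for p in points[:k]]  # naive deterministic init
    for _ in range(iters):
        labels = assign(points, centroids)
        for i in range(k):
            members = [p for p, lab in zip(points, labels) if lab == i]
            if members:  # leave an empty cluster's centroid where it is
                centroids[i] = [sum(dim) / len(members) for dim in zip(*members)]
    return assign(points, centroids), centroids


labels, centroids = kmeans(PAGES, k=2)
```

On this toy data the first three pages end up in one cluster and the last three in another, but the algorithm has no notion of what either cluster is about.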

At zvelo, we prioritize accuracy and strive to provide a best-in-class solution. That is why we focus on accuracy first and then move toward scalability, which means that supervised learning is our only option. It is also why we have established a dedicated internal team focused on creating reliable, consistent, high-quality “ground-truth” examples.

Unfortunately, no machine learning model is perfect; just like humans, models make mistakes. That is why it is essential to continually evaluate models against humans and vice versa. We constantly test our models against human verifiers to make sure they stay up to date and accurate. When a human finds that the algorithm has made a mistake, this data is automatically fed back into the system so that the model can be retrained to avoid such mistakes in the future. Through countless hours of quality assurance and testing, we have found that this constant cycle of monitoring, flagging, and retraining is key to achieving the level of accuracy our customers have come to expect.
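The monitor, flag, and retrain cycle can be sketched as simple bookkeeping: record each human verdict, treat every verified example as new ground truth, and keep the disagreements as the mistakes to learn from. The `FeedbackLoop` class, its retraining threshold, and the example URLs are hypothetical illustrations, not zvelo's pipeline.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackLoop:
    """Hypothetical human-in-the-loop bookkeeping for a categorization model."""

    ground_truth: dict[str, str] = field(default_factory=dict)  # url -> verified label
    mistakes: list[tuple[str, str, str]] = field(default_factory=list)
    reviewed: int = 0

    def review(self, url: str, predicted: str, human_label: str) -> bool:
        """Record a human verdict; return True if the model got it wrong."""
        self.reviewed += 1
        self.ground_truth[url] = human_label  # verified labels feed the next training run
        if predicted != human_label:
            self.mistakes.append((url, predicted, human_label))
            return True
        return False

    def agreement_rate(self) -> float:
        """Fraction of reviewed predictions that matched the human verifier."""
        return 1.0 - len(self.mistakes) / self.reviewed if self.reviewed else 1.0

    def needs_retraining(self, threshold: int = 100) -> bool:
        """Assumed trigger: retrain once enough new mistakes have accumulated."""
        return len(self.mistakes) >= threshold
```

For instance, a verifier who relabels a page the model called “Medical” as “Dating & Relationships” adds one entry to `mistakes` and one verified label to `ground_truth`; the threshold-based trigger is only one possible policy.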

We have now established how we extract information from a website, how we ensure high-quality annotated labels for training, and why we want to calculate the probability distribution over categories given those features.

How do we calculate probability distribution over categories? Which machine learning models do we use?

If you have read much about machine learning, you have probably heard of models like logistic regression, classification and regression trees, support vector machines, and perhaps more exotic models like random forests, gradient-boosted trees, and deep neural networks. It is important not to get too bogged down in any particular model; each has its own strengths and weaknesses.

That is why we use an ensemble method to combine a number of different models into one. The philosophy is that one model may be better than another at classifying a particular category, a particular part of the webpage, or a specific feature. If so, an ideal system would let many models work in concert and automatically determine when one model is better suited to a particular task than another. This approach also makes it easy to add new models to the system: each candidate model is added and tested to measure the increase in accuracy on a test set. If the new model significantly improves performance, it is added to the overall system and deployed to production. In this way, we have built an extremely flexible and adaptable model that can be automatically retrained, corrected by humans, and quickly refined as new models and research are released.
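Here is a minimal Python sketch of the two ideas in this paragraph. The first is a weighted soft-voting ensemble: each model's category probabilities are scaled by a per-category weight, so a model trusted for one category counts for more there. The second is a simple gate for accepting a new model based on test-set accuracy. The model outputs, the weights, and the `min_gain` threshold are hypothetical; a production system would more likely learn the weights (for example, by stacking) and use a statistical test to decide what “significantly improves” means.

```python
from typing import Callable, Sequence


def combine(predictions: Sequence[dict[str, float]],
            weights: Sequence[dict[str, float]]) -> dict[str, float]:
    """Weighted soft voting with a separate weight per model and category."""
    combined = {}
    for category in predictions[0]:
        combined[category] = sum(
            w.get(category, 1.0) * p[category] for p, w in zip(predictions, weights)
        )
    total = sum(combined.values())
    return {c: v / total for c, v in combined.items()}  # renormalize to sum to one


def accuracy(predict: Callable[[str], str], test_set: Sequence[tuple[str, str]]) -> float:
    """Fraction of (page, label) pairs the model gets right."""
    return sum(predict(page) == label for page, label in test_set) / len(test_set)


def accept_new_model(baseline_acc: float, candidate_acc: float, min_gain: float = 0.01) -> bool:
    """Assumed rule: deploy only if test accuracy improves by at least min_gain."""
    return candidate_acc - baseline_acc >= min_gain


# Hypothetical outputs of a text model and an image model for one page.
text_model = {"Medical": 0.6, "Dating & Relationships": 0.4}
image_model = {"Medical": 0.9, "Dating & Relationships": 0.1}
weights = [
    {"Medical": 1.0, "Dating & Relationships": 1.0},
    {"Medical": 2.0, "Dating & Relationships": 0.5},  # image model trusted more for Medical
]
result = combine([text_model, image_model], weights)
```

With these weights the combined probability of “Medical” is about 0.84. Keeping the weights per category is what allows the ensemble to lean on whichever model is stronger for a given category, which is the behavior this paragraph describes.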

Thanks for reading, and stay tuned for Part II, where I’ll explore our approach to content translation and its integral role in our web content categorization systems for non-English URLs.