
How Today’s Search Engines Make Finding Objectionable and Dangerous Content as Easy as 1-2-3

Search engines are at the very core of our digital experiences, both on the internet and in plenty of other services from cable to streaming video, and even our social media platforms. They provide critical functionality within nearly every operating system, networking tool, app, and website and are designed to help us explore and filter through a huge amount of content with only a few keyboard strokes, clicks, voice commands, or button presses.

Unfortunately, with the almost infinite amount of content available online, search engines that are designed to organize, tailor, and serve results based on nearly any input or request are producing many unwanted and dangerous results, including quick and easy access to pornography, child exploitation material, terrorist-related and violent content, and more.

Whether it’s known as safe browsing, parental controls, filth filtering, or some other term, failure to implement protections against unwanted search results can have significant negative consequences for the individual, the family, or the operator of the search service.

In this blog, we explore the growing security concerns presented by objectionable content and the impacts of search technology that was originally designed to provide fast and easy searching of nearly unlimited content—especially when that content includes the worst of what’s on the web. We will look at the consequences for families who aren’t taking steps to protect themselves, as well as what services and devices you should be aware of.

How Safe are Search Engines and Results in Home Services and Devices?

Early in January, TechCrunch published a scathing article based on a report it commissioned from the online safety startup AntiToxin. The article detailed how Microsoft’s search engine, Bing, has failed to put proper safeguards in place to block objectionable content in searches, specifically illegal child exploitation imagery. What’s worse, Bing seems to have amplified the problem by aggregating photos and content, making it easier for criminals to browse illicit content, and even recommending that content in other searches. This incited anger and backlash from the community and from internet watchdogs, who believe, and continue to argue, that large tech companies, cable operators, and others that offer or embed search capabilities in their products simply aren’t doing enough, or reinvesting enough of their massive profits, to implement proper security measures.

But Microsoft isn’t the only tech company in hot water, struggling to solve the problem. Other applications, including WhatsApp, have recently come under fire for not properly securing and policing their services. In late 2017, YouTube came under similar scrutiny when it was found that the quality rating standards for the major streaming platform were confusing and inadequate. This further stoked the conversation around how human-training efforts create problems in AI implementations, particularly when they don’t meet acceptable standards for identifying and blocking objectionable material.

There is a growing list of streaming services and companies that have been excoriated for their poor protections or mishandling of objectionable content. It’s an unfortunate misuse of technology that criminals can leverage the power of these tools and the internet for illegal online behavior. It’s also unfortunate that groups of people who participate in illegal activities end up flocking to the tools and platforms that are found to not put sufficient levels of security and protections in place.

There have been efforts to regulate, create protections, and block illicit content (especially sexual and exploitative content), but so far none have made a significant impact; they have only caused further confusion and uncertainty.

In the United States last year, the House and Senate passed FOSTA-SESTA, a two-bill package designed to curb sex trafficking and illicit activity online and to make it illegal to knowingly assist, facilitate, or support sex trafficking. So far, it’s had a significant impact on sites like Reddit, Craigslist, Pounced.org, and more. The legal changes have forced hosting companies to remove “personals” sections where they cannot effectively monitor content posted by their users.

Source: Craigslist FOSTA Disclaimer

That’s thanks to a significant change it makes to Section 230 of the 1996 Communications Decency Act: FOSTA-SESTA places the burden of responsibility for illegal content directly on the platform or host. While proponents have praised our political system for being effective enough to pass a bill, many in the tech community continue to argue that it puts an increased burden of risk on tech startups, smaller companies, and innovation, and is ultimately destructive for the growth and economy of the internet.

On a positive note, there are a number of organizations that work tirelessly to combat child exploitation and abusive online imagery. In fact, zvelo is a proud partner of the Internet Watch Foundation (IWF) and other watchdog groups—who work with global technology companies to police, report, and remove this type of illegal content from the internet.

So what’s the answer? How can tech companies, cable operators, and others balance ease of use and safety in their search capabilities? We believe that it will be increasingly important for every provider of search services, whether through a browser, voice search, or a mobile app, to take steps to ensure the proper filtering of objectionable, unsafe, and exploitative search results.

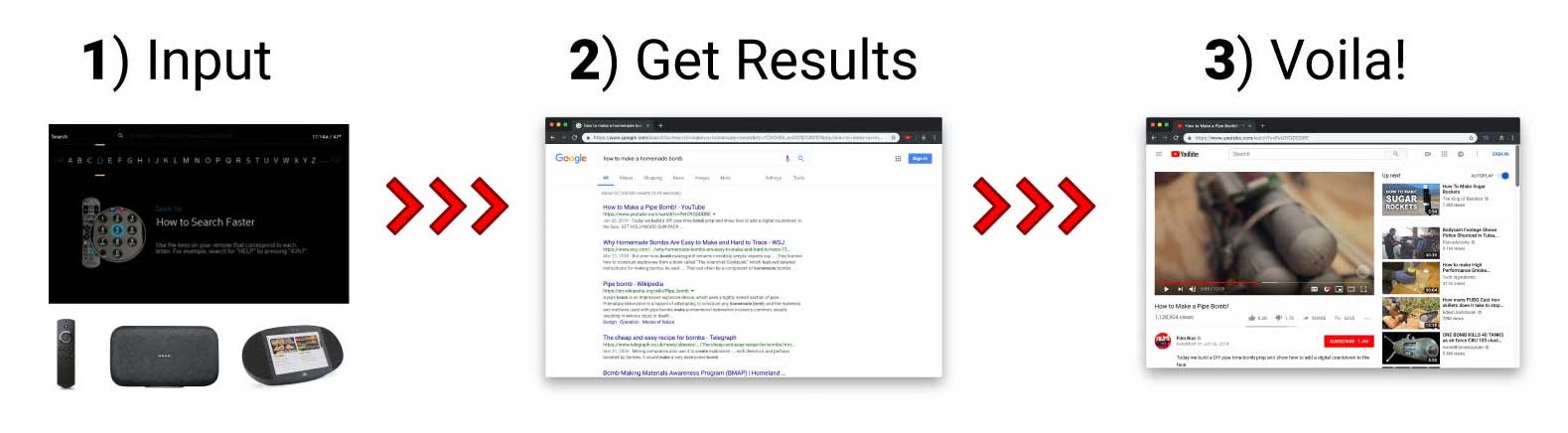

Today’s Search Makes Finding Objectionable Content as Easy as 1-2-3

Search tools built into our everyday household devices and services make it increasingly easy to find content that is not family- or child-friendly. Take a look at the diagram below. If we wanted to find information on, for example, how to make a deadly weapon from household items, we could simply type or speak a search and, voilà, the rest is taken care of for us.

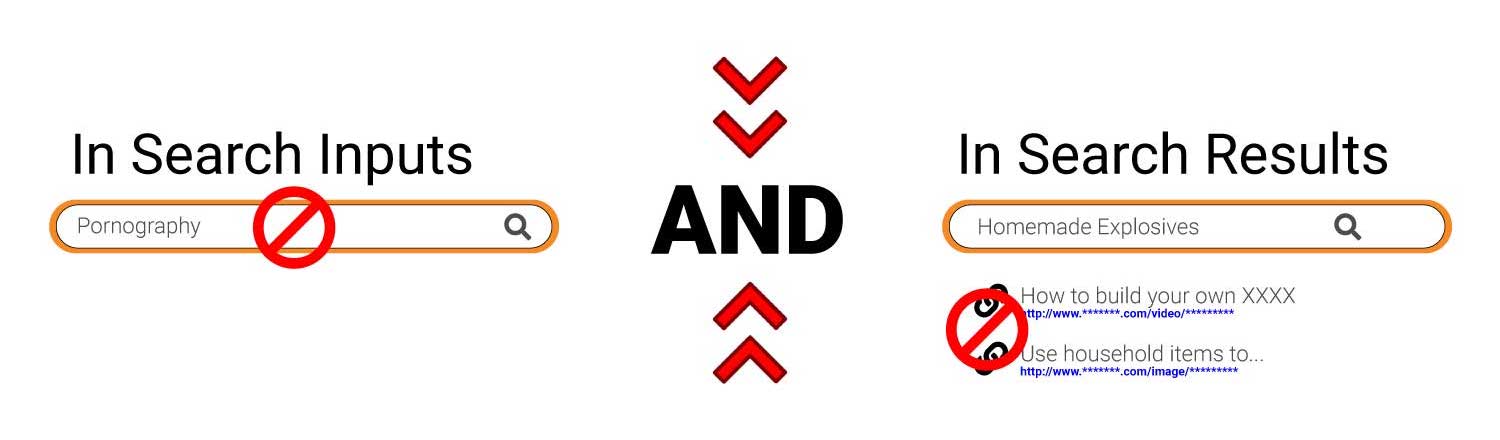

However, by implementing safe search and filth filters at two levels (in search inputs AND in search results), Multiple Service Operators (MSOs) such as cable companies and streaming content providers, as well as device makers, can significantly reduce the ability of their services to be misused. This matters most when families and children are the ones using these services.
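To make the two levels concrete, here is a minimal sketch of a wrapper that checks the search input first and then filters the search results. Everything in it is an assumption made for illustration: the term list, the category names, the `Result` type, and the `search_fn` callback are hypothetical placeholders, and the category on each result is assumed to come from some content categorization service.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical placeholder data; a real deployment would load maintained lists.
BLOCKED_TERMS = {"badword", "example blocked phrase"}
BLOCKED_CATEGORIES = {"pornography", "violence"}


@dataclass
class Result:
    title: str
    url: str
    category: str  # assumed to be supplied by a categorization service


def is_query_allowed(query: str) -> bool:
    """Level 1: reject a query that contains a blocked term as whole words."""
    normalized = " ".join(query.lower().split())
    padded = f" {normalized} "
    return not any(f" {term} " in padded for term in BLOCKED_TERMS)


def filter_results(results: List[Result]) -> List[Result]:
    """Level 2: drop results whose category is on the blocked list."""
    return [r for r in results if r.category not in BLOCKED_CATEGORIES]


def safe_search(query: str, search_fn: Callable[[str], List[Result]]) -> List[Result]:
    """Run both levels around an arbitrary search backend."""
    if not is_query_allowed(query):
        return []  # never forward a blocked query to the backend
    return filter_results(search_fn(query))
```

The input check is cheap and stops obviously unsafe queries early, while the result check catches objectionable content that an innocent-looking query might still surface. Note that this simple whole-word check ignores punctuation and misspellings, which a production filter would need to handle.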

Impacts & Consequences of Unsafe Search Results

If search results are not protected and proper mediation is not in place, search engines can return all forms of objectionable, unsafe, and exploitative content. In this situation, they ultimately serve as curators of objectionable content, providing easy access and, in some cases, even recommending other harmful results. This is particularly troubling as search engines are increasingly able to index each and every dark corner of the web, from websites to video to apps to OTT and more. With a quick search, someone can find every source of porn, hate, child exploitation, jihadism, bomb-making and more, all accessible with a single click.

But what is considered “objectionable” may vary slightly based on cultural norms, as well as regional laws and mores. Topics and content related to pornography, abortion, tobacco, and weapons may not be illegal but are considered unsuitable for children and even young adults. Other topics are more widely accepted as objectionable for all audiences. These topics include content depicting child exploitation, abusive sexual acts and violence, as well as terrorist-related content and propaganda. These forms of objectionable content have even more damaging consequences and demand a higher level of categorization accuracy and scrutiny to provide proper protections for users. Depending on local and regional laws, even being in possession of these types of content may be illegal, while any act of sharing or disseminating content (even a link) can be criminal and harshly punished. How will local laws continue to change, and what will be considered content? Could a browser cookie from a site that is known to host objectionable content be considered illegal?

Because of the complexities involved with certain types of content, as well as differences in the local filtering protections required for specific implementations, deciding how search results for potentially objectionable topics are returned, filtered, or displayed requires a wide range of considerations. In some circumstances, search results for pornography, child sexual exploitation, and certain other sexual-related content should be blocked because of the illegality of accessing such content. But what about sex education? Blocking or omitting educational content from search results because it includes diagrams or references sexual activity or anatomy would be unintended censorship, and would fail to meet the user’s search intent. For these reasons, a high level of granularity AND accuracy is a critical precursor to any viable form of filtering protection for search.
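As a rough illustration of why granularity matters, the sketch below makes a filtering decision per category rather than per broad topic, so that sex education can remain visible while pornography is blocked for a child profile. The category names, the profile names, and the policy itself are hypothetical examples, not an actual taxonomy or a recommendation for any particular jurisdiction.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    BLOCK = "block"


# Hypothetical categories that are blocked for every audience.
ALWAYS_BLOCKED = {"child_exploitation", "terrorism_propaganda"}

# Hypothetical per-profile blocks; note that "sex_education" is a separate
# category from "pornography" and is not blocked for either profile.
PROFILE_BLOCKS = {
    "child": {"pornography", "weapons", "tobacco"},
    "adult": set(),
}


def decide(category: str, profile: str) -> Action:
    """Return the filtering action for one result category and one profile."""
    if category in ALWAYS_BLOCKED:
        return Action.BLOCK
    # Unknown profiles fall back to the strictest ("child") policy.
    blocked = PROFILE_BLOCKS.get(profile, PROFILE_BLOCKS["child"])
    return Action.BLOCK if category in blocked else Action.ALLOW
```

With a coarse taxonomy that lumped everything sexual into one category, the only choices would be to block sex education along with pornography or to allow both. Fine-grained categories make the distinction possible, but only if the underlying classification is accurate.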

Harmful Effects on the Family and Childhood Development

Legal concerns aside, objectionable content can have damaging effects on children’s psychological development as well as their long-term mental health. Studies have found that exposure to sexual and violent content, especially at an early age, has a significant negative impact on the development of healthy self-esteem and relationship-building skills. Pornography and other sexually suggestive material may lead children to explore sexual behaviors at an earlier age, participate in high-risk sexual activities, and use drugs and alcohol.

Meanwhile, violent media online and on television can cause stress for children, who may become more fearful of the world around them, more desensitized, and less empathetic towards the feelings of others. That exposure may manifest itself later in the form of violent behavior.

“Research by psychologists L. Rowell Huesmann, Leonard Eron and others starting in the 1980s found that children who watched many hours of violence on television when they were in elementary school tended to show higher levels of aggressive behavior when they became teenagers.”

Source: https://www.apa.org/action/resources/research-in-action/protect.aspx

In an age where connected devices and the internet continue to make their way into all aspects of our lives, we believe it’s important that there be discussions about adequate protections and safe search in all services and devices, from desktop and mobile phone browsers to the voice-activated search embedded in cable remote controls and smart home assistants. The consequences don’t just fall on individuals and families but on our society in general, and are ultimately paid for by state and federally funded social programs.

At-Risk Services & Devices

So what services and devices are we referring to? Safe search isn’t just critical in our web browsers and on computers, laptops, and smartphones. The advancement and adoption of smart home cable remotes and smart assistants like Alexa have brought voice search into the mainstream over the past couple of years. We’re now connected by voice to our smartphones, kitchen appliances, and more.

Parents are encouraged to demand and implement safe search controls for children under 13 wherever possible. Below, we’ve listed some of the devices with embedded search that parents should be aware of. Many may already be on a family’s radar, while others, particularly with the integration of voice search, might surprise you:

- Desktops, laptops, smartphones, and tablets

- Smart TVs, streaming boxes, etc.

- Smart assistants, speakers, and smart displays

- Cable, Satellite, DVR, and other similar services offering remotes with voice search capabilities

- Music streaming services

So Who’s Responsible For Safe Search Results?

The reality is that the burden to vet all household services and devices falls upon parents and guardians. This is why it is so important that responsible service providers and device makers integrate filth and objectionable content filtering in their search services as standard capabilities. These capabilities will keep the worst types of content from being returned in search results.

Implementing Safe Search Capabilities

Our approach to the problem utilizes the largest, most comprehensive, and most current database of objectionable search terms, which can be integrated with any search engine. Leveraging the same technologies used in our market-leading content categorization services, zvelo is able to identify new search terms, including single words, multiple-word phrases, slang terms, and more, that can be immediately propagated to partners for use in filtering their search results.
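For readers who want a feel for how a list of single words and multiple-word phrases might be matched against a query, and how newly identified terms might be merged in, here is a small generic sketch. It is not zvelo’s implementation or API: the `TermList` class, its methods, and the version counter are hypothetical names invented for this example.

```python
import re
from typing import Iterable, List


class TermList:
    """A local, updatable list of blocked words and multi-word phrases."""

    def __init__(self, terms: Iterable[str] = ()):
        self.version = 0       # incremented on every update
        self._terms = set()    # each term stored as a tuple of words
        self._max_words = 1    # longest phrase length seen so far
        self.update(terms)

    @staticmethod
    def _normalize(text: str) -> tuple:
        # Lowercase and split on anything that is not a letter, digit, or apostrophe.
        return tuple(re.findall(r"[a-z0-9']+", text.lower()))

    def update(self, new_terms: Iterable[str]) -> None:
        """Merge newly identified terms (for example, from a provider feed)."""
        for term in new_terms:
            words = self._normalize(term)
            if words:
                self._terms.add(words)
                self._max_words = max(self._max_words, len(words))
        self.version += 1

    def matches(self, query: str) -> List[str]:
        """Return every blocked word or phrase found in the query."""
        words = self._normalize(query)
        found = []
        for size in range(1, self._max_words + 1):
            for start in range(len(words) - size + 1):
                gram = words[start:start + size]
                if gram in self._terms:
                    found.append(" ".join(gram))
        return found
```

A search service could call `matches()` on each incoming query and call `update()` whenever a fresh batch of terms arrives. Matching on word sequences rather than raw substrings avoids flagging innocent words that merely contain a blocked term.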

zvelo serves a wide range of partners across the network security, advertising, MSO, mobile, and other industries who place a premium on highly accurate and comprehensive classification of content, as well as malware and phishing detection.

zveloCAT, our cloud-based categorization system, classifies web content into nearly 500 highly granular topic-based, malicious, and objectionable categories. In coordination with other microservices (e.g., image analysis), human-supervised machine learning, dozens of third-party feeds, and our partners like the IWF, zvelo helps service providers implement safe search tools and “filth filters” that deliver increased insight, control, and protection for users.

If you’d like to learn more about how zvelo is helping to protect search results in products around the world, contact us.